You already know why search engine results matter. Rankings affect traffic, leads, and revenue. Decisions based on bad data lead to wasted time and wrong strategy. I have worked with enough search data projects to know that the source of your SERP data matters as much as how you use it.

My approach stays simple. I look at accuracy, stability, coverage, and how much effort it takes to maintain the setup. I also look at how a provider handles blocks, location targeting, and scale. This guide walks you through how I think about SERP scrapers, tracking APIs, rank APIs, and free options, and why choosing the right platform saves you problems later. You will also see why many teams rely on a SERP rank API and compare providers to find the best SERP API for long-term use.

What a SERP scraper actually does

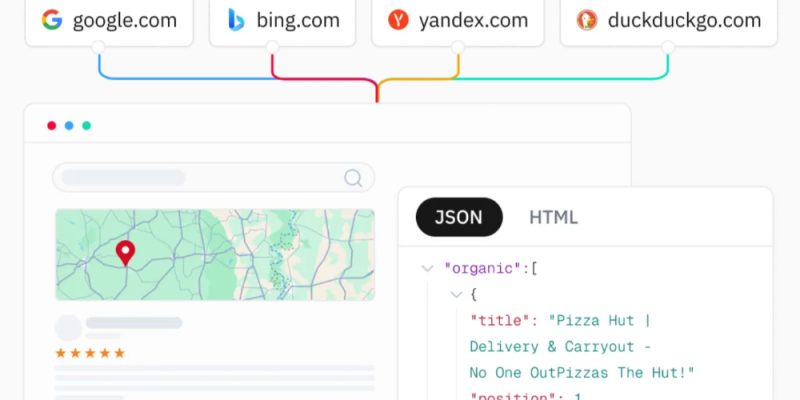

A SERP scraper pulls search engine result pages and returns the data in a usable format. That data can include rankings, URLs, titles, snippets, ads, maps, and related searches.

I always tell people to separate scraping logic from data use. Your scraper should focus on one job.

- Fetch results reliably
- Avoid blocks and captchas
- Match real user locations
- Return clean structured data

If any of these fail, your tracking breaks. Manual scraping scripts fail fast once volume increases. That is where a SERP scraper API becomes useful.

SERP scraper API vs SERP tracking API

These two get mixed up often. I see this mistake a lot.

A SERP scraper API focuses on raw result retrieval. You send a query, location, device type, and search engine. You receive the full result set.
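
As a rough sketch of what such a request carries, the snippet below bundles the four inputs into one object and serializes them as query parameters. The field names, the allowed device values, and the `serp.example.invalid` endpoint are assumptions for illustration only; a real provider defines its own parameter names.

```python
from dataclasses import dataclass, asdict
from urllib.parse import urlencode


@dataclass(frozen=True)
class SerpQuery:
    """One SERP request. Field names are hypothetical, not a provider's schema."""
    query: str
    location: str
    device: str = "desktop"
    engine: str = "google"

    def __post_init__(self) -> None:
        # Reject obviously bad input before any request is sent.
        if not self.query.strip():
            raise ValueError("query must not be empty")
        if self.device not in ("desktop", "mobile"):
            raise ValueError(f"unsupported device: {self.device!r}")


def build_request_url(base_url: str, q: SerpQuery) -> str:
    """Serialize the query as URL parameters on a placeholder endpoint."""
    return f"{base_url}?{urlencode(asdict(q))}"


url = build_request_url(
    "https://serp.example.invalid/search",  # placeholder, not a real service
    SerpQuery(query="running shoes", location="Austin, Texas", device="mobile"),
)
```

Keeping the request in one validated object means the same keyword, location, and device combination can be replayed exactly on every run.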

A SERP tracking API focuses on monitoring rankings over time. It tracks position changes, volatility, and history.

The best systems support both. I prefer APIs that let you pull raw results and also support tracking logic on top. This keeps your workflow flexible. You can build reports, alerts, or models without switching providers.
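
The tracking side can be layered on top of raw results with very little code. The sketch below assumes you already have a dated list of positions for one keyword (with `None` for days the page did not rank) and derives position changes and a simple volatility figure. The function names and the choice of standard deviation as a volatility measure are my own assumptions, not a provider's definition.

```python
from statistics import pstdev
from typing import List, Optional, Tuple

# (ISO date, position or None if the page was not found that day)
Snapshot = Tuple[str, Optional[int]]


def position_changes(history: List[Snapshot]) -> List[Tuple[str, int]]:
    """Change versus the previous observed position; positive means moved up."""
    observed = [(day, pos) for day, pos in history if pos is not None]
    return [
        (day, prev_pos - pos)
        for (_, prev_pos), (day, pos) in zip(observed, observed[1:])
    ]


def volatility(history: List[Snapshot]) -> float:
    """Population standard deviation of the observed positions."""
    positions = [pos for _, pos in history if pos is not None]
    return pstdev(positions) if len(positions) > 1 else 0.0


# Illustrative data only.
history = [("2024-01-01", 8), ("2024-01-02", 5), ("2024-01-03", None), ("2024-01-04", 6)]
changes = position_changes(history)
```

Days with no ranking are skipped here rather than treated as a drop, which is a design choice you may want to reverse if disappearing from the results is itself a signal for you.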

Why a SERP rank API matters for scale

Tracking a few keywords looks easy. Scaling to thousands across multiple locations changes everything.

A SERP rank API solves common problems:

- Accurate geo simulation at city or state level
- Consistent rankings across requests
- Stable success rates at volume
- No manual proxy management

I care about consistency more than speed. One failed batch ruins trend data. Rank tracking only works when results stay predictable.
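
One way to guard against a silently incomplete batch is to compare what you asked for with what came back before writing anything to your trend tables. This is a minimal sketch; the `keyword`, `location`, and `status` keys are an assumed result shape, not any specific API's response format.

```python
from typing import Dict, Iterable, List, Tuple

Pair = Tuple[str, str]  # (keyword, location)


def missing_pairs(expected: Iterable[Pair], results: Iterable[Dict]) -> List[Pair]:
    """Return the (keyword, location) pairs that have no successful result."""
    succeeded = {
        (r["keyword"], r["location"]) for r in results if r.get("status") == "ok"
    }
    return sorted(set(expected) - succeeded)


expected = [("running shoes", "Austin"), ("running shoes", "Denver")]
results = [
    {"keyword": "running shoes", "location": "Austin", "status": "ok"},
    {"keyword": "running shoes", "location": "Denver", "status": "failed"},
]
gaps = missing_pairs(expected, results)
```

If `gaps` is not empty, retrying only those pairs keeps the batch complete without re-fetching everything.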

How to judge the best SERP API

I use the same checklist every time.

Data accuracy

Does the API return what a real user sees? This includes ads, maps, and local packs when needed. Location accuracy matters here.

Anti-block handling

Search engines block automated traffic quickly. The provider should handle rotation, headers, sessions, and browser behavior without you touching it.

Location and device control

You should control country, region, city, desktop, and mobile. Anything less limits your analysis.

Output format

Clean JSON matters. I avoid APIs that require heavy parsing. Structured results save time and reduce bugs.
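
To show how little code structured output should need, here is a sketch that finds a domain's position in a JSON response. The `organic`, `position`, and `url` keys are an assumed shape for illustration; check your provider's documentation for the real field names.

```python
import json
from typing import Optional
from urllib.parse import urlparse


def find_rank(payload: str, domain: str) -> Optional[int]:
    """Return the position of the first organic result on `domain`, if any."""
    data = json.loads(payload)
    for item in data.get("organic", []):
        host = urlparse(item["url"]).hostname or ""
        # Match the domain itself or any subdomain such as www.
        if host == domain or host.endswith("." + domain):
            return item["position"]
    return None


# Illustrative payload only.
payload = json.dumps({
    "organic": [
        {"position": 1, "url": "https://other.example/page"},
        {"position": 2, "url": "https://www.shop.example/shoes"},
    ]
})
rank = find_rank(payload, "shop.example")
```

If extracting a rank takes much more than this, the parsing burden has shifted from the provider to you.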

Pricing logic

Paying only for successful requests matters. Failed calls should not count.
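
A quick way to see why this matters: when failed calls are billed, the effective price of each usable result is the list price divided by the success rate. The figures below are made-up numbers for illustration, not any provider's rates.

```python
def cost_per_success(price_per_request: float, success_rate: float,
                     failures_billed: bool) -> float:
    """Effective cost of one successful result under either billing model."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    if failures_billed:
        # Every attempt is charged, so failures inflate the cost per usable result.
        return price_per_request / success_rate
    return price_per_request


# Hypothetical: 0.002 per request at an 80% success rate.
billed = cost_per_success(0.002, 0.80, failures_billed=True)
success_only = cost_per_success(0.002, 0.80, failures_billed=False)
```

In this hypothetical, billing for failures raises the real cost per result by a quarter, even though the list price is identical.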

Thoughts on SERP API free options

Free tools look attractive early. I understand why people try them.

Here is what usually happens.

- Limited daily requests
- Incomplete results
- Missing local accuracy
- High block rates

Free SERP APIs help with testing concepts. They rarely work for production tracking. Once real decisions depend on the data, free options fall short.

I recommend treating free tools as experiments only.

Why Thordata fits serious SERP work

When I evaluate platforms, I focus on infrastructure. Thordata stands out because they control the full stack.

They operate a global proxy network with more than 60 million IPs across 190 countries. This matters for SERP data because location accuracy drives ranking accuracy. Their system supports residential, mobile, ISP, and datacenter IPs with precise targeting.

Their real-time SERP API handles JavaScript rendering, anti-bot systems, and session management automatically. That reduces maintenance work. The output stays clean and structured, which helps when feeding dashboards or models.

Performance also matters. High uptime and strong success rates reduce data gaps. Their pay-only-for-success pricing aligns incentives with results.

They design the platform for scale. Developers get API-based control, sub-accounts, and clear documentation. Enterprises get account support focused on stability and IP quality. Compliance also stays central, with attention to GDPR and CCPA standards.

This combination makes their SERP scraper API suitable for tracking, analysis, and automation without constant tuning.

How I suggest using a SERP tracking API

I keep workflows simple.

- Define keyword sets clearly
- Lock locations and devices
- Track at consistent intervals
- Store raw results alongside rankings

This approach protects you from algorithm changes and layout shifts. When search results change format, raw data helps explain rank movement.
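
Storing raw results next to the extracted rank can be as simple as one table. The sketch below uses SQLite from the Python standard library; the table name, columns, and sample values are assumptions chosen for illustration.

```python
import json
import sqlite3
from typing import Optional


def init_db(conn: sqlite3.Connection) -> None:
    """Create one table holding both the rank and the raw response."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS serp_snapshots (
            keyword    TEXT NOT NULL,
            location   TEXT NOT NULL,
            device     TEXT NOT NULL,
            checked_at TEXT NOT NULL,   -- ISO 8601 timestamp
            position   INTEGER,         -- NULL when the page did not rank
            raw_json   TEXT NOT NULL,   -- full response, kept for later re-parsing
            PRIMARY KEY (keyword, location, device, checked_at)
        )
        """
    )


def save_snapshot(conn: sqlite3.Connection, keyword: str, location: str,
                  device: str, checked_at: str, position: Optional[int],
                  raw: dict) -> None:
    """Insert or overwrite the snapshot for this keyword, place, device, and time."""
    conn.execute(
        "INSERT OR REPLACE INTO serp_snapshots VALUES (?, ?, ?, ?, ?, ?)",
        (keyword, location, device, checked_at, position, json.dumps(raw)),
    )
    conn.commit()


conn = sqlite3.connect(":memory:")  # use a file path for real storage
init_db(conn)
save_snapshot(conn, "running shoes", "Austin, Texas", "mobile",
              "2024-01-01T00:00:00Z", 4, {"organic": []})
```

Because the raw JSON is kept, you can re-extract rankings later if a layout change shows your original parsing missed something.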

A stable SERP tracking API lets you focus on interpretation rather than debugging.

Final guidance

If rankings influence your strategy, data quality matters. SERP scraping fails quietly when done wrong. The right SERP API removes friction, protects accuracy, and supports growth.

I look for reliability, location control, and low maintenance. Platforms built for scale quickly outperform scripts and free tools. Thordata fits teams that value clean data and long-term stability without unnecessary complexity. Choose tools that support how you work today and how you plan to grow. That mindset keeps your tracking useful and your decisions grounded in reality.
